Sami AZ

Voice-to-text apps and dictation tools have been around for decades. From early desktop speech engines to on-device dictation in iOS and Android, the baseline has always been the same: capture spoken words and turn them into text. But in 2025, that is no longer enough.

What teams need now is automation layered on top: context, structure, action extraction, and integration into workflows. In other words, they need AI meeting assistants that do more than transcribe.

In this article, we will:

The basic idea is simple: capture voice and convert it to text. In 2025, many apps do this well. Some key players:

These tools work well for single-speaker dictation, drafting documents, or transcribing recorded audio. However, they lack deep workflow intelligence, which is where AI meeting assistants come in.

To appreciate why AI meeting assistants are winning, we must understand what dictation tools cannot do (or do poorly).

They capture words, but they don’t separate decisions, tasks, or follow-ups. You still need to read and manually pick out what matters.
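To make concrete what separating decisions and tasks means, here is a minimal sketch that tags transcript lines as decisions or action items. It is purely illustrative: the cue phrases, the `Item` type, and `extract_items` are hypothetical, and simple keyword rules like these are far cruder than what a production assistant would use.

```python
import re
from dataclasses import dataclass

# Hypothetical cue phrases; a real assistant would not rely on fixed keyword lists.
DECISION_CUES = re.compile(r"\b(we agreed|we decided|let's go with|final decision)\b", re.IGNORECASE)
ACTION_CUES = re.compile(
    r"\b(i will|i'll|can you|action item|by (monday|tuesday|wednesday|thursday|friday))\b",
    re.IGNORECASE,
)


@dataclass
class Item:
    kind: str     # "decision" or "action"
    speaker: str
    text: str


def extract_items(transcript: list[tuple[str, str]]) -> list[Item]:
    """Tag (speaker, text) lines that look like decisions or action items; drop the rest."""
    items: list[Item] = []
    for speaker, text in transcript:
        if DECISION_CUES.search(text):
            items.append(Item("decision", speaker, text))
        elif ACTION_CUES.search(text):
            items.append(Item("action", speaker, text))
    return items


transcript = [
    ("Ana", "We agreed to ship the beta in May."),
    ("Ben", "I'll draft the release notes by Friday."),
    ("Ana", "Nice weather today."),
]
for item in extract_items(transcript):
    print(item.kind, item.speaker, item.text)
```

Lines that match neither pattern, such as small talk, are simply dropped, which is exactly the filtering a plain dictation transcript leaves to you.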

A dictation tool doesn’t know who is speaking, which topic thread a remark belongs to, which points are binding decisions, or what is optional.

They generally don’t push output into Slack, Notion, a CRM, or task systems automatically. You copy, paste, and attach, so the manual work remains.

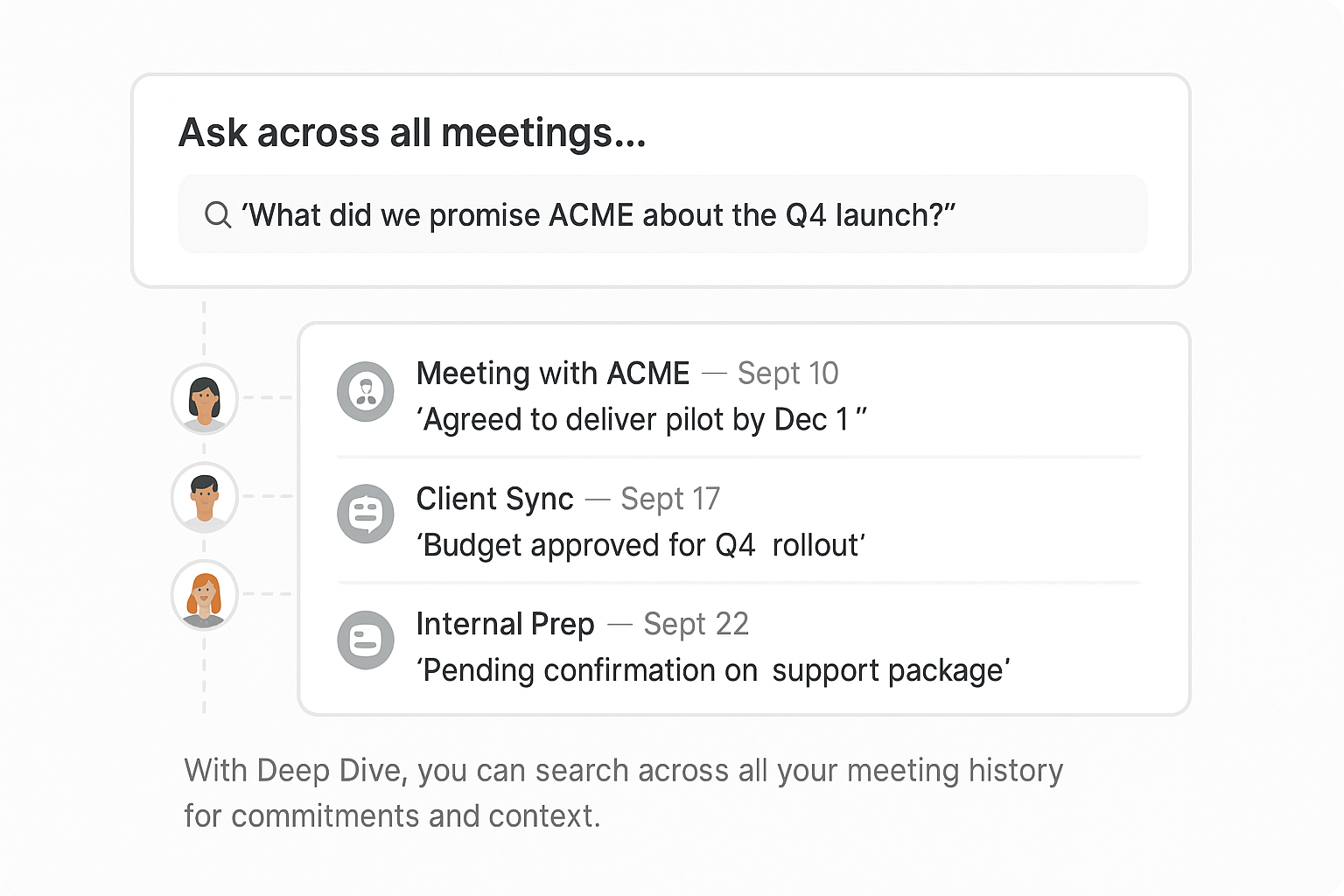

They don’t support cross-meeting queries like “What did we agree on with Company X two months ago?”

If an integration fails or a field is mapped incorrectly, many dictation tools provide no alerts or correction workflows.

They often lack audit logs, field-level access, or compliance controls.

Because of these limitations, many teams end up layering on additional tools or building manual glue between them, which adds complexity and risk of error.

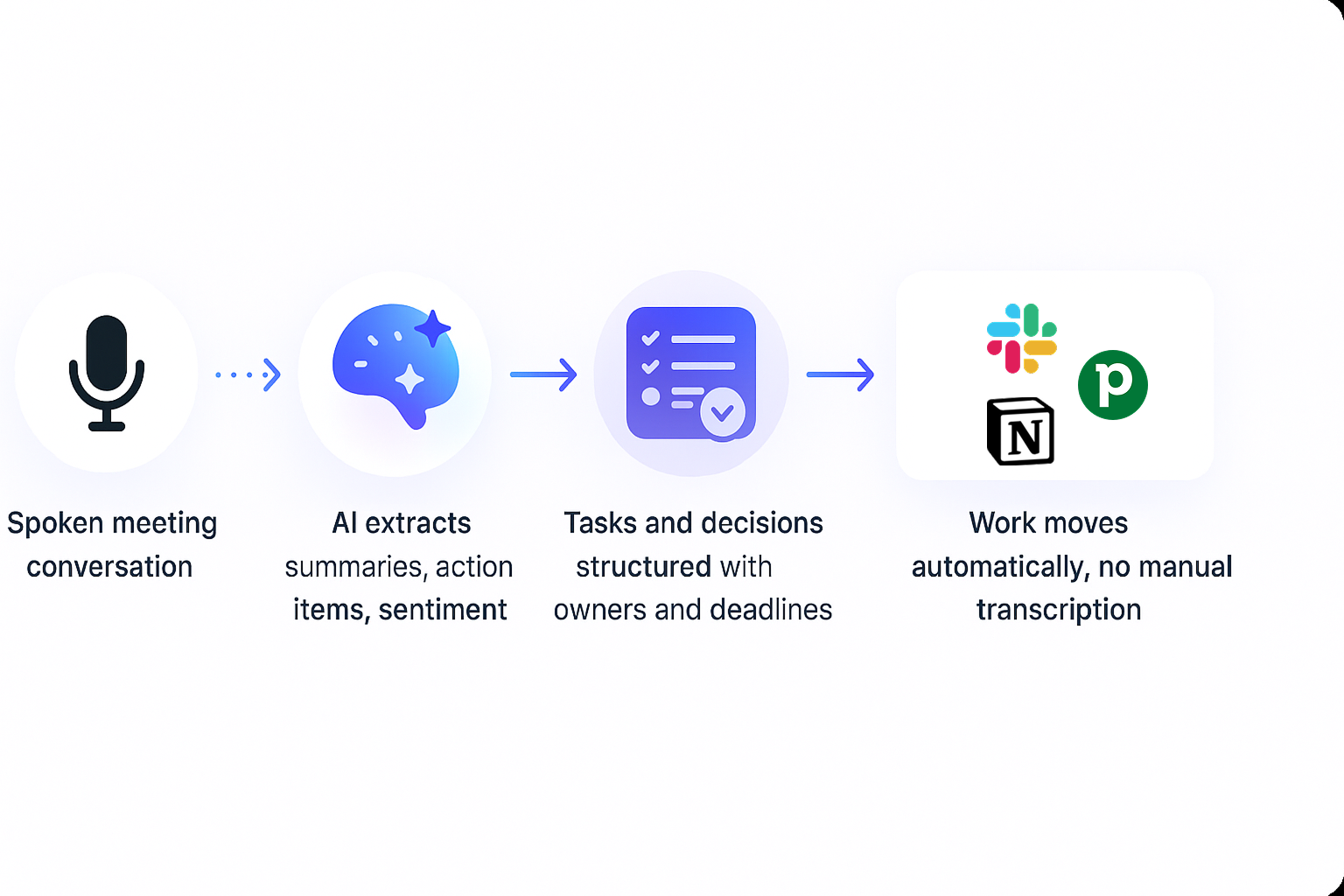

AI meeting assistants build on voice-to-text but add several critical capabilities:

In other words, AI meeting assistants bridge the gap between capturing speech and activating work.

When comparing traditional voice-to-text or dictation tools with AI meeting assistants, the differences become clear across several important criteria.

Voice-to-text tools are primarily designed to capture spoken words accurately, which they generally do well. However, they struggle to identify speakers or understand context; in most cases, these capabilities are absent or very limited. They also do not extract decisions or action items from conversations, leaving users to review transcripts manually. Similarly, assigning deadlines or syncing notes with workflow tools requires manual effort, because voice-to-text systems lack automation and integration features.

AI meeting assistants, on the other hand, go far beyond simple transcription. They not only capture words but also recognize speakers, interpret context, and extract key takeaways such as decisions, tasks, and action points. These assistants can automatically assign deadlines, sync output to tools like Slack, Notion, or Trello, and provide cross-meeting recall, so users can draw on insights from previous discussions.

Additionally, AI meeting assistants raise alerts when something goes wrong, such as a failed sync, so errors are caught and corrected instead of going unnoticed. They also provide governance features such as permissions and activity logs, offering transparency and security that traditional transcription tools lack.

Because of these differences, teams that move from basic voice-to-text apps to AI meeting assistants can see a significant gain in productivity, automation, and overall reliability.

Below is a sampling of well-known dictation and voice-to-text tools in 2025, with their objective strengths and what they miss relative to AI assistants.

When you use voice-to-text apps for meetings, you have to add several manual steps yourself. AI meeting assistants aim to eliminate those steps.

Here is where Klu can differentiate:

Klu should focus not just on capture but on orchestration: meeting → summary → task → sync → alert, with the whole chain automated.
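The meeting → summary → task → sync → alert chain can be sketched as a sequence of plain functions. This is a hypothetical illustration, not Klu’s implementation: `summarize` is a stand-in for a model call, the `TODO owner: title` line convention is invented for the example, and `sync` is any callable that pushes a task to an external tool. The design point it shows is that a failed sync produces an alert instead of silently disappearing.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Task:
    title: str
    owner: str


@dataclass
class MeetingResult:
    summary: str
    tasks: list[Task]
    synced: list[str] = field(default_factory=list)
    alerts: list[str] = field(default_factory=list)


def summarize(transcript: list[str]) -> str:
    # Stand-in for a summarization model: just joins the first two lines.
    return " ".join(transcript[:2])


def extract_tasks(transcript: list[str]) -> list[Task]:
    # Hypothetical convention: lines shaped "TODO <owner>: <title>" become tasks.
    tasks = []
    for line in transcript:
        if line.startswith("TODO "):
            owner, _, title = line[len("TODO "):].partition(":")
            tasks.append(Task(title=title.strip(), owner=owner.strip()))
    return tasks


def run_pipeline(transcript: list[str], sync: Callable[[Task], None]) -> MeetingResult:
    # meeting -> summary -> tasks
    result = MeetingResult(summarize(transcript), extract_tasks(transcript))
    # tasks -> sync -> alert
    for task in result.tasks:
        try:
            sync(task)  # e.g. push to Slack, Notion, or a task tracker
            result.synced.append(task.title)
        except Exception as exc:  # a failed sync becomes an alert, not a silent drop
            result.alerts.append(f"sync failed for '{task.title}': {exc}")
    return result
```

In a test or demo, `sync` can be a fake that raises for some tasks, which makes the alert path easy to exercise without any real integration.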

You define meeting types (client call, brainstorm, retrospective), each with a template that specifies what is extracted and where the content is sent.
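A template of this kind can be modeled as plain data. In this hypothetical sketch, each meeting type maps to the item kinds to extract and the destinations that receive them; `MeetingTemplate`, `TEMPLATES`, `route`, and every kind and destination name are illustrative assumptions, not Klu’s actual configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MeetingTemplate:
    extract: tuple[str, ...]       # item kinds to pull out of the meeting
    destinations: tuple[str, ...]  # tools that receive the extracted items


# Hypothetical templates; kinds and destination names are illustrative only.
TEMPLATES = {
    "client_call": MeetingTemplate(("decisions", "action_items", "risks"), ("crm", "slack")),
    "brainstorm": MeetingTemplate(("ideas",), ("notion",)),
    "retrospective": MeetingTemplate(("went_well", "to_improve", "action_items"), ("notion", "task_tracker")),
}


def route(meeting_type: str, extracted: dict[str, list[str]]) -> dict[str, dict[str, list[str]]]:
    """Keep only the item kinds the template asks for and fan them out to each destination."""
    template = TEMPLATES[meeting_type]
    payload = {kind: extracted.get(kind, []) for kind in template.extract}
    # Copy the lists per destination so one integration cannot mutate another's payload.
    return {dest: {k: list(v) for k, v in payload.items()} for dest in template.destinations}
```

Keeping templates as data rather than code means adding a new meeting type is a configuration change, not a new integration.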

Data flows into your tools: instead of you leaving Klu to move work elsewhere, Klu pushes the work there.
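As one concrete example of pushing work out, Slack incoming webhooks accept an HTTP POST with a JSON body containing a `text` field. The sketch below only builds the request with Python’s standard library; the webhook URL is a placeholder you would get from your own Slack app, and `build_slack_request` is a hypothetical helper, not part of any Klu API.

```python
import json
import urllib.request


def build_slack_request(webhook_url: str, summary: str, tasks: list[str]) -> urllib.request.Request:
    """Build (but do not send) a POST to a Slack incoming webhook."""
    lines = [f"*Meeting summary:* {summary}"] + [f"• {t}" for t in tasks]
    body = json.dumps({"text": "\n".join(lines)}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder URL; a real one comes from your Slack app's incoming-webhook settings.
req = build_slack_request(
    "https://hooks.slack.com/services/T000/B000/XXXX",
    "Beta ships in May",
    ["Ana: draft spec"],
)
```

Calling `urllib.request.urlopen(req, timeout=10)` would actually send it; keeping construction separate from sending makes the payload easy to inspect and test.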

Ask a question like “What did we commit to Acme two weeks ago?” and get a structured answer backed by the meetings it came from.
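Once a question like that has been parsed into a company name and a time offset, answering it is a filtered lookup over stored commitments. This sketch assumes the parsing step has already happened (a real system would likely use a language model and semantic search for that), and `Commitment`, `commitments_around`, and the three-day tolerance window are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Commitment:
    company: str
    meeting_date: date
    text: str
    source_meeting: str  # lets each answer cite the meeting it came from


def commitments_around(
    records: list[Commitment],
    company: str,
    today: date,
    weeks_ago: int,
    window_days: int = 3,
) -> list[Commitment]:
    """Commitments to `company` made within `window_days` of the date `weeks_ago` weeks before `today`."""
    target = today - timedelta(weeks=weeks_ago)
    return [
        r
        for r in records
        if r.company.casefold() == company.casefold()
        and abs((r.meeting_date - target).days) <= window_days
    ]
```

For “What did we commit to Acme two weeks ago?” the call would be `commitments_around(records, "Acme", date.today(), weeks_ago=2)`; each result carries `source_meeting`, which is what lets the answer point back to its evidence.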

If an integration fails, you get notified. You can correct the field mapping, and the system improves from the correction. Access control and activity logging help keep your data secure.
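The failure path can be sketched as two checks: validate the field mapping before sending, and retry transient errors before alerting. Everything here is a hypothetical illustration: `FIELD_MAP`, the destination field names, `push_with_retry`, and the `send`/`notify` callables are assumptions. A mapping error is reported immediately because retrying cannot fix it; correcting the mapping means editing `FIELD_MAP`.

```python
import time
from typing import Callable

# Hypothetical mapping from extracted task fields to a destination's field names.
FIELD_MAP = {"title": "Name", "owner": "Assignee", "due": "Due Date"}
REQUIRED_DEST_FIELDS = {"Name", "Assignee"}


def map_fields(task: dict[str, str], field_map: dict[str, str]) -> dict[str, str]:
    """Rename task fields for the destination; fail loudly if a required field is unmapped."""
    mapped = {field_map[k]: v for k, v in task.items() if k in field_map}
    missing = REQUIRED_DEST_FIELDS - set(mapped)
    if missing:
        raise ValueError(f"missing mapped fields: {sorted(missing)}")
    return mapped


def push_with_retry(
    task: dict[str, str],
    send: Callable[[dict[str, str]], None],
    notify: Callable[[str], None],
    field_map: dict[str, str] = FIELD_MAP,
    attempts: int = 3,
    delay_s: float = 0.5,
) -> bool:
    try:
        record = map_fields(task, field_map)
    except ValueError as exc:
        notify(f"mapping error: {exc}")  # retrying cannot fix a bad mapping
        return False
    for attempt in range(1, attempts + 1):
        try:
            send(record)
            return True
        except ConnectionError as exc:  # treat network errors as transient
            if attempt == attempts:
                notify(f"integration failed after {attempts} attempts: {exc}")
                return False
            time.sleep(delay_s * attempt)  # linear backoff between attempts
    return False
```

Separating “the data is wrong” from “the network is flaky” is the main design choice: the first needs a human to fix the mapping, the second usually resolves on its own.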

Klu flags language such as “blocked,” “delay,” “budget issue,” and “urgent,” as well as negative tone. With these features, Klu bridges the gap between speech and execution.
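Keyword-based flagging of phrases like these can be sketched with regular expressions. This is an assumption-laden illustration: the patterns and `flag_risks` are hypothetical, and detecting negative tone would need a sentiment model, which this sketch does not attempt.

```python
import re

# Hypothetical risk vocabulary built from the example phrases; real systems would go further.
RISK_PATTERNS = {
    "blocked": re.compile(r"\bblocked\b", re.IGNORECASE),
    "delay": re.compile(r"\bdelay(ed|s)?\b", re.IGNORECASE),
    "budget issue": re.compile(r"\bbudget (issue|problem)s?\b", re.IGNORECASE),
    "urgent": re.compile(r"\burgent(ly)?\b", re.IGNORECASE),
}


def flag_risks(lines: list[str]) -> list[tuple[int, str]]:
    """Return (line_index, risk_label) pairs for every line that matches a risk pattern."""
    flags = []
    for i, line in enumerate(lines):
        for label, pattern in RISK_PATTERNS.items():
            if pattern.search(line):
                flags.append((i, label))
    return flags


lines = [
    "Deploy is blocked on legal review.",
    "All good here.",
    "This is urgent: the vendor delayed shipment.",
]
print(flag_risks(lines))
```

Returning line indexes rather than just labels lets a summary link each flag back to the exact moment in the transcript.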

Try Klu Free today to see how automation can level up your remote team meetings.

Q: Can I just use voice-to-text and build my own workflow?

Yes, you can, but you’ll need glue code, scripts, automations, and manual checks, and the setup becomes more complex and fragile as it grows. AI meeting assistants absorb that work.

Q: Are voice-to-text apps more accurate than AI assistants?

Some dictation tools are excellent in controlled environments. But AI assistants are catching up, and their context awareness makes their output more usable.

Q: Can AI meeting assistants replace dictation apps?

Yes, for meetings. They provide everything dictation does plus workflow automation. You would only need a pure dictation app, if at all, for use cases outside meetings.

Q: Do AI meeting assistants work offline?

It depends on the product. Some features may require a cloud connection, so a design that supports hybrid (online and offline) use is ideal.

Q: Will Klu support both meeting voice capture and dictation for writing?

That is a viable product area, and combining meeting logic with dictation is powerful, so Klu should support both.

Q: How does Klu handle accents, background noise, overlapping speech, and speaker separation?

Klu uses advanced models and fallback corrections. You can adjust transcripts or enforce speaker mapping rules.

Q: Can Klu sync past recordings you have in dictation apps?

Yes, Klu should support backfill. You can upload transcripts or recordings to structure them.

Q: What is the ROI improvement from using AI meeting assistants vs voice-to-text apps?

Teams report saving hours per meeting, missing fewer follow-ups, and reducing context confusion, so the productivity gain can be substantial.